Simone Luchini

Postdoctoral Researcher

Hi, I am Simone! I study creativity in humans and artificial systems. My work intersects cognitive psychology, neuroscience, and artificial intelligence. I am currently a postdoctoral researcher at the Paris Brain Institute, working with Dr. Emmanuelle Volle and Dr. Alizée Lopez-Persem. I conducted my PhD at Penn State University, working with Dr. Roger Beaty. I have also been a visiting student at the labs of Dr. Emmanuelle Volle at the Paris Brain Institute, and Dr. Bodong Chen at the University of Pennsylvania.

My research fits into three related categories. (1) Understanding the neural (brain) systems that support creative thinking, leveraging neuroimaging (e.g., fMRI, fNIRS) and neuromodulation techniques (e.g., neurofeedback, tDCS, tACS). (2) Defining the cognitive systems that underlie creativity, employing network science, factor analytic techniques, longitudinal analyses, and more. (3) Exploring how representations and creative behaviors of humans compare to those of AI (primarily Large Language Models), to develop artificial systems capable of human-like creativity. You can read about some selected projects from these lines of research below.

Creativity Neuroscience

This line of research investigates the neural substrate of creative thinking in humans. Studies leverage a variety of neuroimaging techniques, such as fMRI and fNIRS, often in combination with neuromodulatory techniques like neurofeedback or brain stimulation. I am particularly interested in tying the neural mechanisms that support creative thinking to specific cognitive processes, to shed light on the nature of creativity.

June 2025

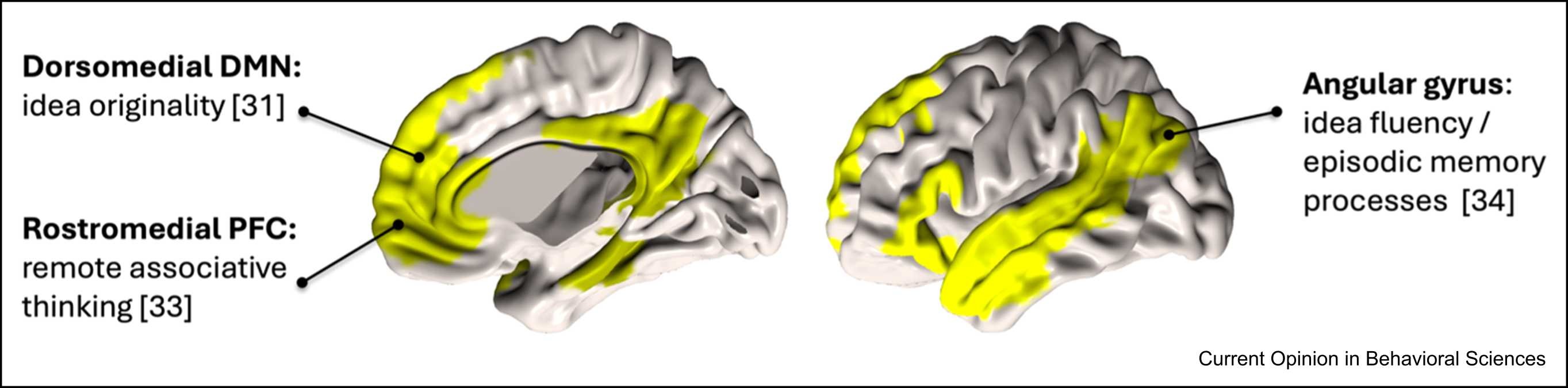

The role of the default mode network in creativity

Luchini, S. A., Volle, E., & Beaty, R. E. (2025). The role of the default mode network in creativity. Current Opinion in Behavioral Sciences, 65, 101551.

How does the default mode network (DMN) contribute to creativity? Decades of research have explored this question, employing increasingly advanced methodologies and updated theoretical frameworks. In our review, we first summarize past work linking the DMN and creativity, and outline current trends in the neuroscientific study of creativity. We then emphasize four promising research directions for advancing our understanding of the DMN's role in creativity: (1) its causal involvement in creative thinking processes, (2) its contribution to remote associative thinking, (3) its contribution to creative idea evaluation, and (4) its capacity to functionally integrate diverse information from distant brain regions.

Read more

May 2025

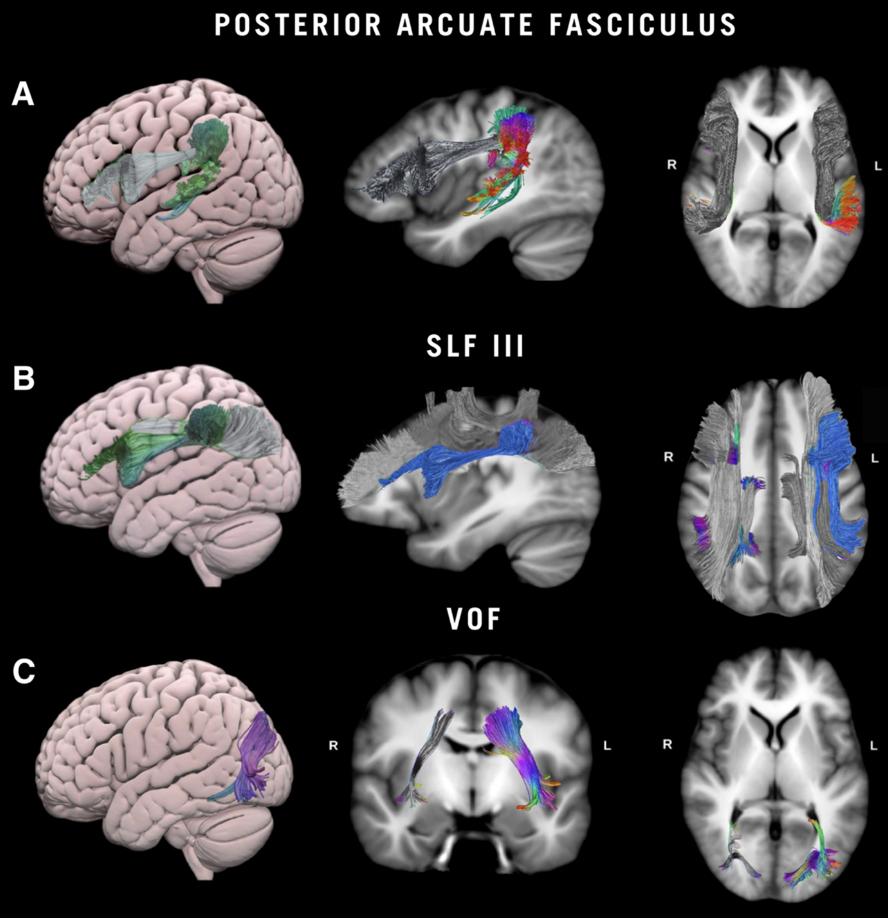

The White Matter of Aha! Moments

Salvi, C., Luchini, S. A., Pestilli, F., Hanekamp, S., Hope, T., Parrish, T., ... & Grafman, J. (2025). The White Matter of Aha! Moments. BMC Psychology.

Individuals can solve creative problems via step-by-step (analytical) thinking or through insight ("Aha!" moments). Studies have identified widespread functional brain activity involved in insight problem-solving, yet the white matter substrate of insight remains unexplored. In this study, we employed DTI to investigate how white matter microstructure relates to insight versus step-by-step analytical reasoning. After controlling for age and gender, insight was linked to lower FA in the left posterior Arcuate Fasciculus and bilateral Superior Longitudinal Fasciculi III. Conversely, step-by-step reasoning was linked to higher FA in the left Vertical Occipital Fasciculus and in the anterior corpus callosum. This work reveals distinct structural connectivity patterns associated with different modes of creative problem-solving, contributing to our understanding of the neural architecture supporting creative cognition.

Read more

April 2025

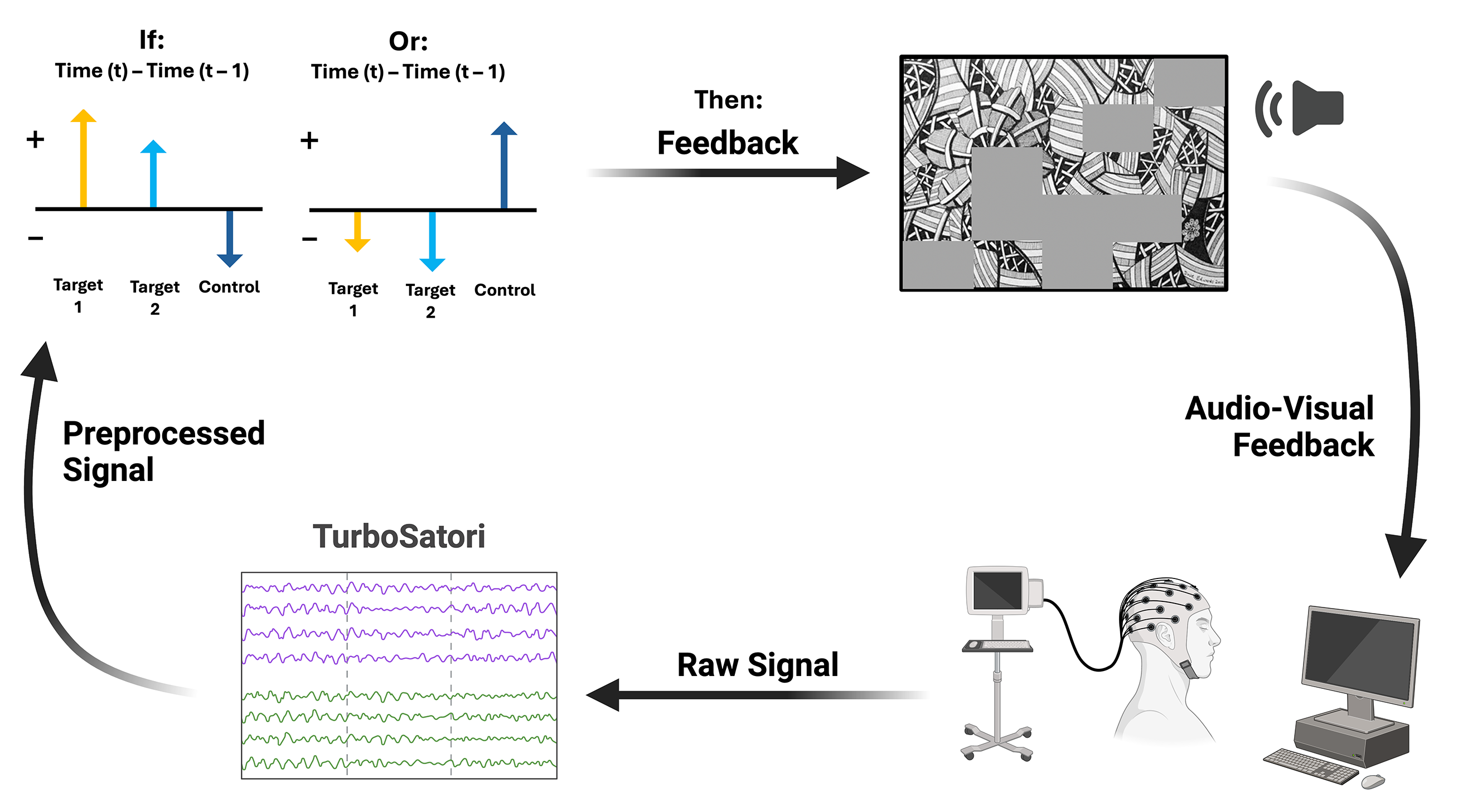

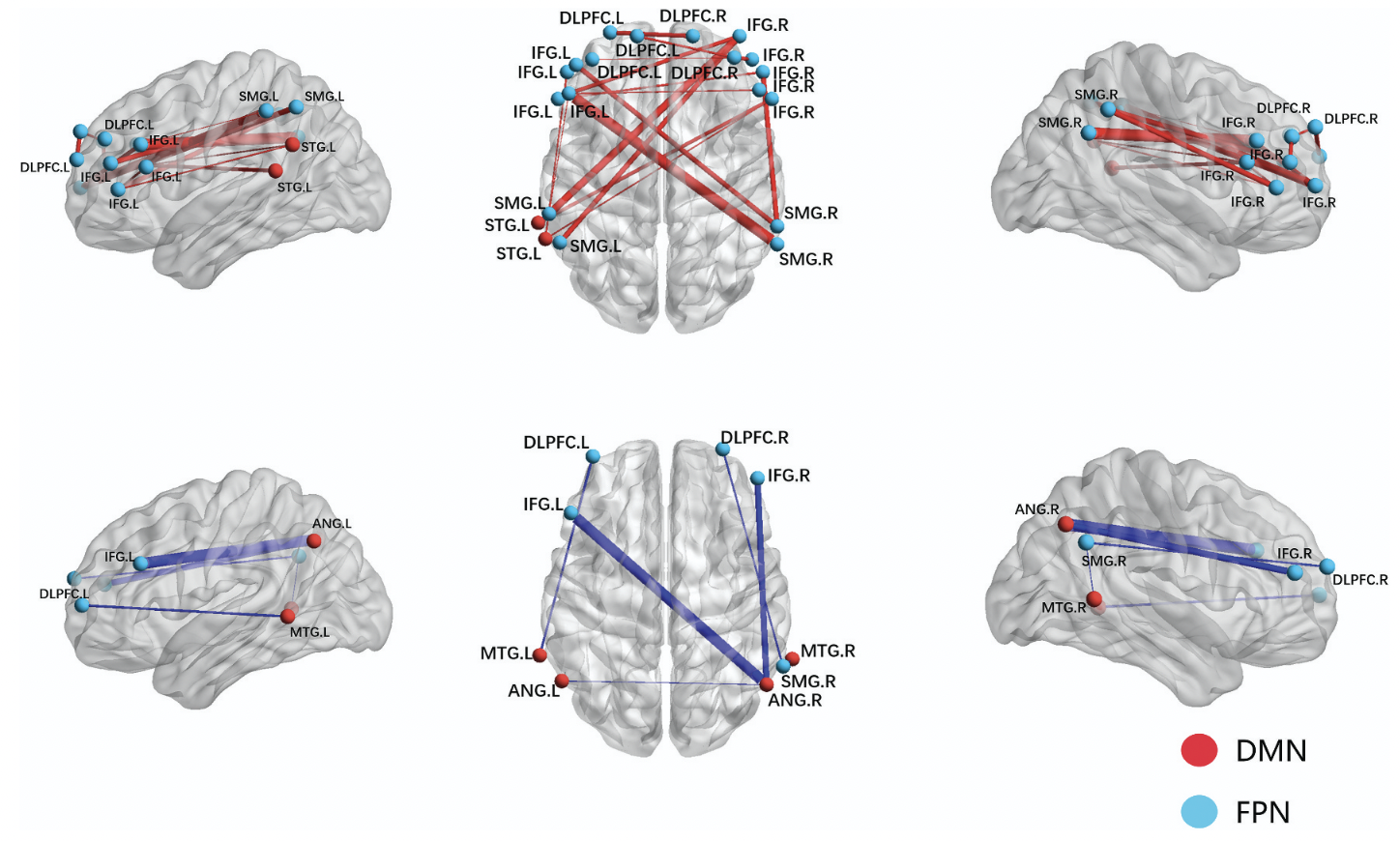

Enhancing Creativity with Covert Neurofeedback: Causal Evidence for Default-Executive Network Coupling in Creative Thinking

Luchini, S. A., Zhang, X., White, R. T., Lührs, M., Ramot, M., & Beaty, R. E. (2025). Enhancing creativity with covert neurofeedback: causal evidence for default-executive network coupling in creative thinking. Cerebral Cortex, 35(4), bhaf065.

Correlational evidence has linked creativity to coupling between the Default Mode Network (DMN) and Executive Control Network (ECN). In this study, we leveraged covert neurofeedback to endogenously modify functional connectivity between DMN and ECN without the participants' knowledge. We compared this to a control neurofeedback condition, entraining coupling between medial prefrontal cortex and supplementary motor area. Approximately 24 hours after neurofeedback, DMN-ECN neurofeedback led to increased coupling between these networks during a creative thinking task (generating creative object uses), extending to broader DMN regions. Behaviorally, we observed a double dissociation: the DMN-ECN neurofeedback increased idea originality, while the control neurofeedback improved go/no-go reaction times. We thus provide the first evidence that DMN-ECN coupling causally enhances creative performance.

Read more

April 2023

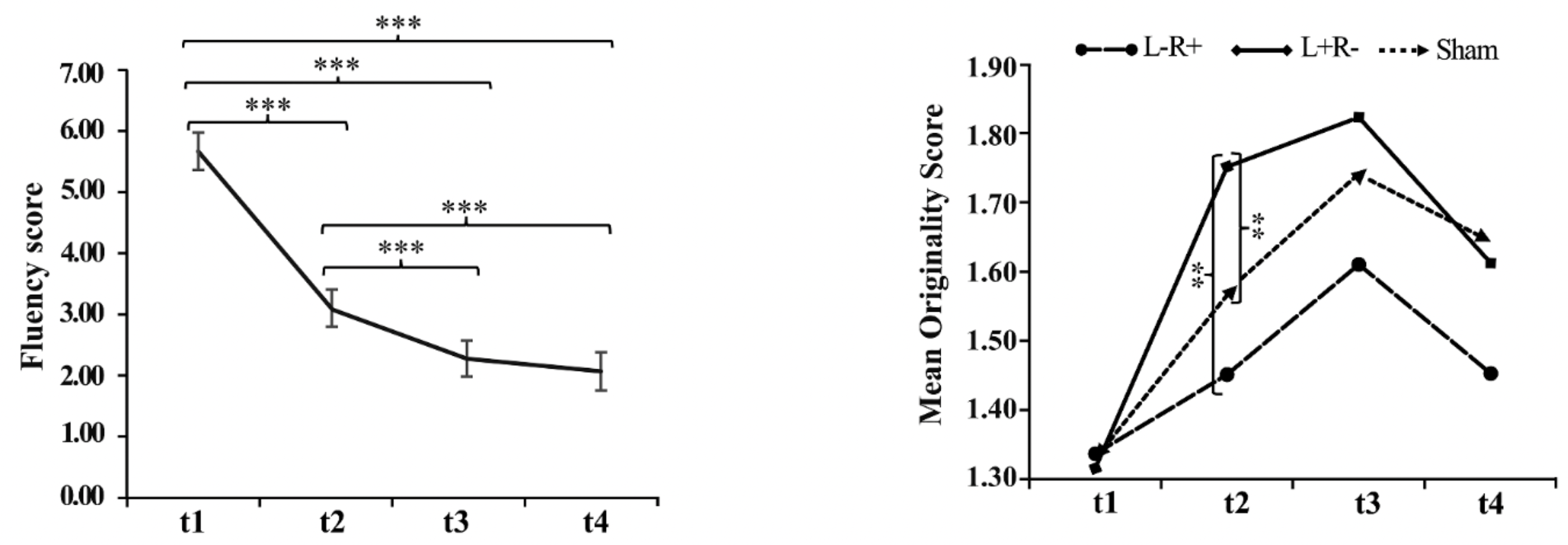

Accelerating Creativity: Effects of Transcranial Direct Current Stimulation on the Temporal Dynamics of Divergent Thinking

Li, Y., Beaty, R. E., Luchini, S., Dai, D. Y., Xiang, S., Qi, S., ... & Hu, W. (2023). Accelerating creativity: effects of transcranial direct current stimulation on the temporal dynamics of divergent thinking. Creativity Research Journal, 35(2), 169-188.

tDCS over the DLPFC enhances creative thinking, but the temporal dynamics and underlying cognitive mechanisms remain unclear. We investigated how this tDCS affects convergent and divergent thinking, focusing on the serial order effect (ideas becoming more original over time) and the role of cognitive inhibition. In a within-subjects design, participants received three types of cross-hemispheric tDCS (left cathodal/right anodal, L-R+; left anodal/right cathodal, L+R-; and sham) over the DLPFC. They completed flanker tasks measuring cognitive inhibition, plus the AUT measuring divergent thinking and RAT measuring convergent thinking. Results showed that L+R- stimulation significantly enhanced originality in the AUT compared to sham, with no effect on the RAT. The L+R- condition also showed a diminished serial order effect, with accelerated production of original ideas, and was accompanied by better flanker task performance. These findings suggest that L+R- tDCS over the DLPFC accelerates idea originality, with cognitive inhibition potentially mediating the enhancement in divergent thinking.

Read more

August 2022

Automated creativity prediction using natural language processing and resting-state functional connectivity: an fNIRS study

Xie, C., Luchini, S., Beaty, R. E., Du, Y., Liu, C., & Li, Y. (2022). Automated creativity prediction using natural language processing and resting-state functional connectivity: an fNIRS study. Creativity Research Journal, 34(4), 401-418.

Creative thinking relies on large-scale brain connectivity. Specifically, functional coupling of the DMN and ECN has been linked to creative performance. In this study, we show for the first time that brain signals captured with fNIRS can be used to predict creative performance measured via an automated computational method. We also find that dynamic resting-state functional connectivity is twice as effective as static resting-state functional connectivity at predicting creative performance. We extend past work in the network neuroscience of creativity by showing that stable predictions of objective creative performance can be derived from dynamic fNIRS signals.
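To make the static versus dynamic distinction concrete, here is a minimal sketch that assumes a sliding-window approach, which is one common way to estimate dynamic connectivity; the study's actual pipeline may differ. The two toy signals, the window length, and the function names are hypothetical and chosen only for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def sliding_window_connectivity(x, y, window, step=1):
    """Correlation between x and y inside each successive time window."""
    starts = range(0, len(x) - window + 1, step)
    return [pearson(x[s:s + window], y[s:s + window]) for s in starts]


# Hypothetical signals from two regions (not real fNIRS data).
region_a = [1, 2, 3, 4, 1, 2, 3, 4]
region_b = [1, 2, 3, 4, 4, 3, 2, 1]

# Static connectivity: one correlation over the whole recording.
static_fc = pearson(region_a, region_b)

# Dynamic connectivity: one correlation per non-overlapping window.
dynamic_fc = sliding_window_connectivity(region_a, region_b, window=4, step=4)

print(static_fc)   # close to 0: coupling averages out over time
print(dynamic_fc)  # close to [1.0, -1.0]: coupling flips between windows
```

In this toy case the static estimate hides a change in coupling that the windowed estimates expose, which is the kind of time-varying information a dynamic measure can carry.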

Read more

Creative Cognition

This line of research investigates the cognitive underpinnings of creative thinking in humans. Studies leverage a variety of methodologies, such as network science, factor analytic techniques, and longitudinal studies. I am particularly interested in uncovering the role of associative memory processes in creativity, as well as teasing apart the spontaneous and goal-directed influences on creativity.

July 2025

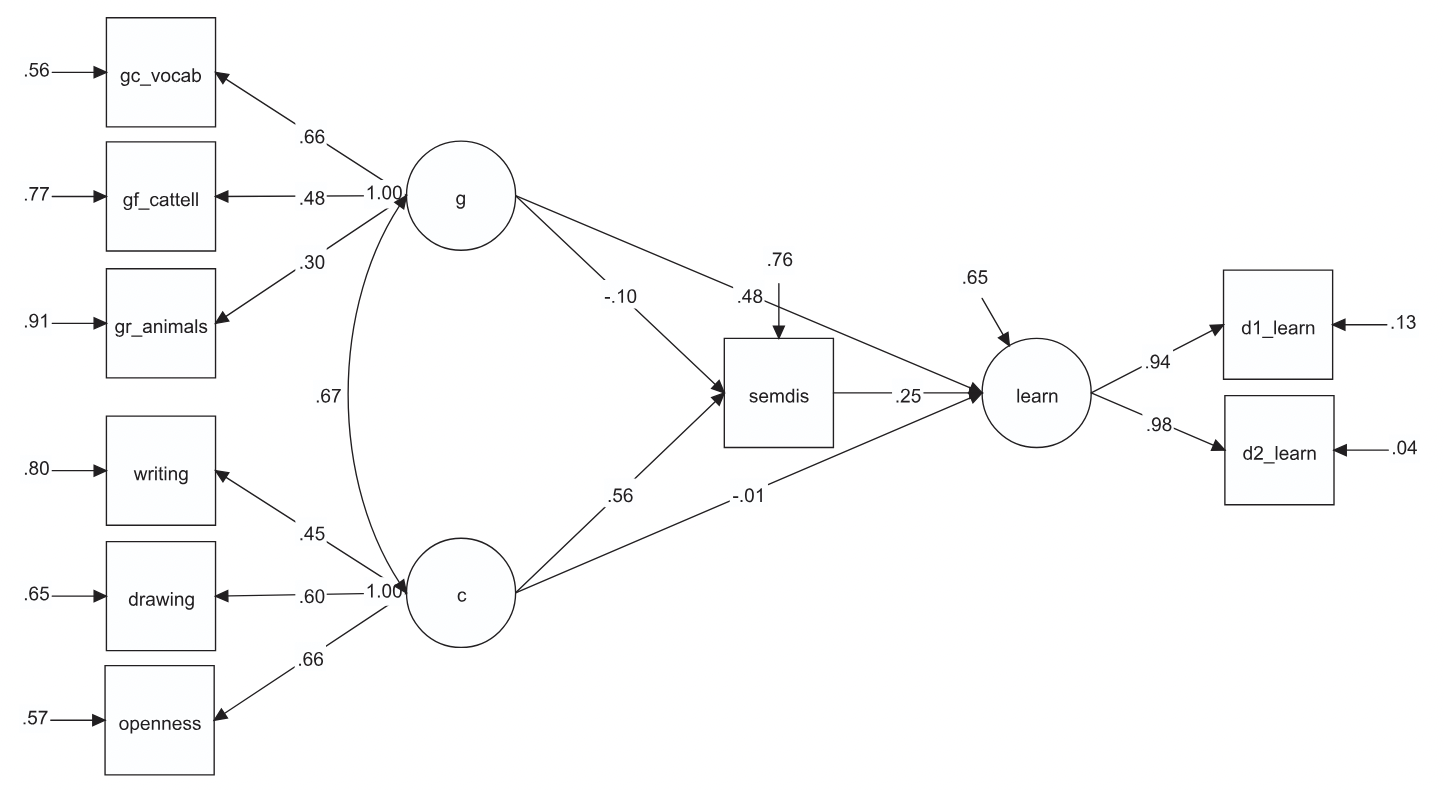

Creativity supports learning through associative thinking

Luchini, S. A., Kaufman, J. C., Goecke, B., Wilhelm, O., Kenett, Y. N., Lei, D., ... & Beaty, R. E. (2025). Creativity supports learning through associative thinking. npj Science of Learning, 10(1), 42.

Code and data available here.

Creativity has consistently been linked to academic learning outcomes. Despite decades of research on creativity and learning, little is known about the cognitive mechanisms underlying their relationship. In two studies, we examined whether creativity supports associative learning through associative thinking—the ability to generate novel word associations—an ability central to creativity which has not been previously tied to associative learning. In Study 1, we found that students who generated more novel word associations learned more words on a foreign language learning test 24 h later. In Study 2, we replicated and extended the effect to naturalistic creativity tasks (i.e., writing short stories and sketching line drawings), finding associative thinking mediated the relationship between creativity and associative learning. Importantly, both studies controlled for general intelligence. Our findings suggest that creativity’s contribution to learning operates partly through a shared cognitive capacity for making new connections.

Read more

June 2025

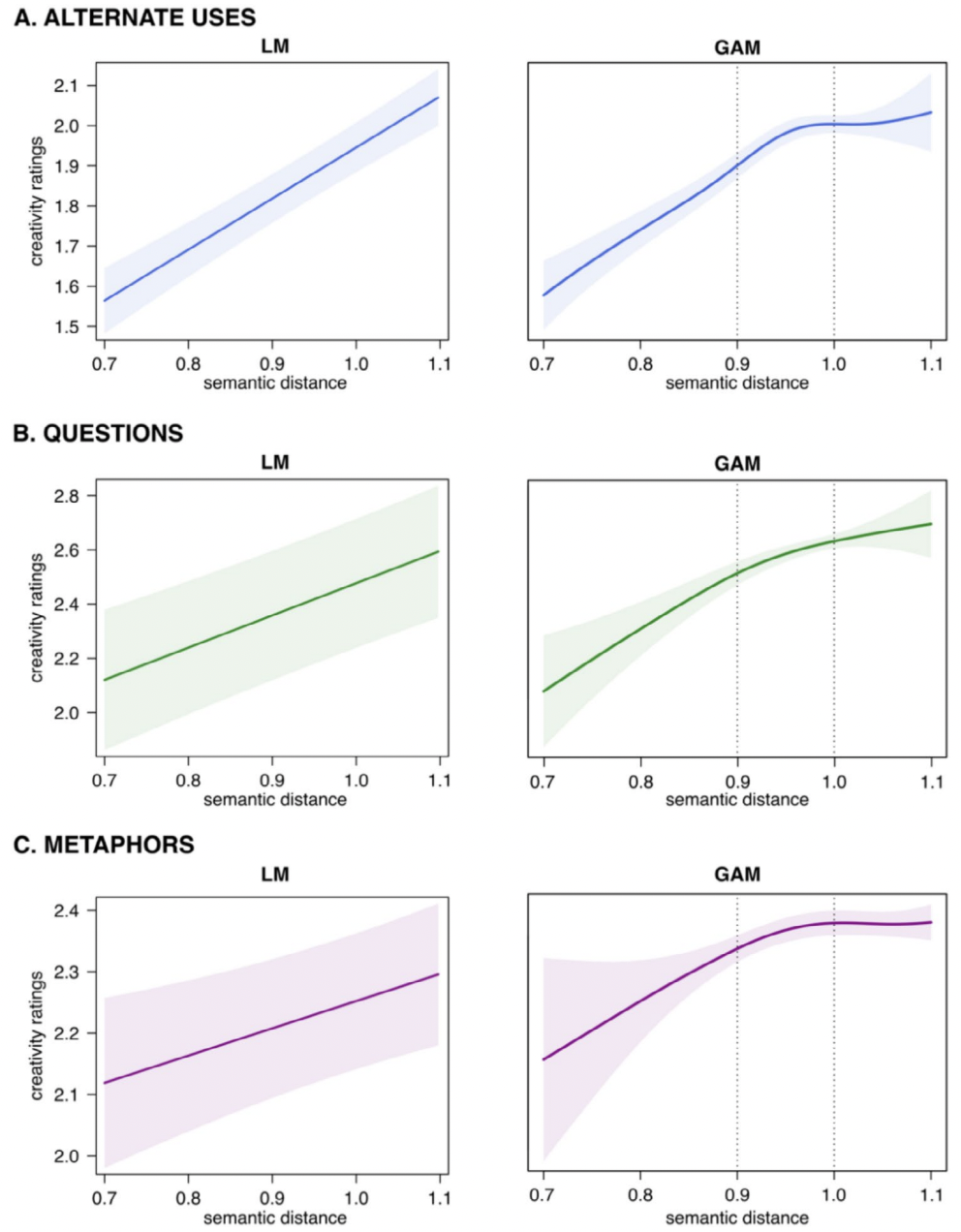

A ‘Sweet Spot’ for Creative Ideation

Orwig, W., Luchini, S. A., Beaty, R. E., & Schacter, D. L. (2025). A ‘Sweet Spot’ for Creative Ideation: Non‐Linear Associations Between Semantic Distance and Creativity. The Journal of Creative Behavior, 59(3), e70041.

Code and data available here.

What is the precise relationship between semantic distance and creativity? Semantic distance is the degree of conceptual dissimilarity between words. In creativity, this is typically calculated between the task prompt and participant response. Semantic distance and creativity links have traditionally been explored using linear models, with the embedded assumption that as semantic distance increases, so does the creative quality of ideas. However, informal observations would suggest that distant associations may sometimes become too incoherent or nonsensical to be considered creative. Using generalized additive models (GAMs), we explored the non-linear nature of this relationship across three divergent thinking tasks: alternate uses, question asking, and metaphor generation. Our results revealed a consistent pattern: human ratings of creativity increased with semantic distance up to a certain threshold, after which point, additional semantic distance did not translate into more subjectively creative ideas. These findings provide a more nuanced understanding of the interplay between semantic distance and creativity than previously available, suggesting that the relationship is best characterized as curvilinear rather than linear.
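As a rough illustration of the measure described above, semantic distance is often computed as one minus the cosine similarity between the word embeddings of a prompt and a response. The sketch below assumes that definition; the three-dimensional vectors are made up for illustration, whereas real analyses use embeddings from a trained language model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def semantic_distance(prompt_vec, response_vec):
    """Semantic distance as 1 - cosine similarity (an assumed definition)."""
    return 1.0 - cosine_similarity(prompt_vec, response_vec)


# Hypothetical toy embeddings, not taken from any real model.
toy_embeddings = {
    "brick": [1.0, 0.0, 0.0],
    "wall": [0.8, 0.6, 0.0],
    "paperweight": [0.0, 1.0, 0.0],
}

prompt = toy_embeddings["brick"]
near = semantic_distance(prompt, toy_embeddings["wall"])
far = semantic_distance(prompt, toy_embeddings["paperweight"])

print(near)  # about 0.2: a common, closely related use
print(far)   # about 1.0: a more remote association
```

A linear model would treat every increase in this distance as an increase in creativity; the non-linear analysis asks whether that still holds at the far end of the scale.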

Read more

August 2024

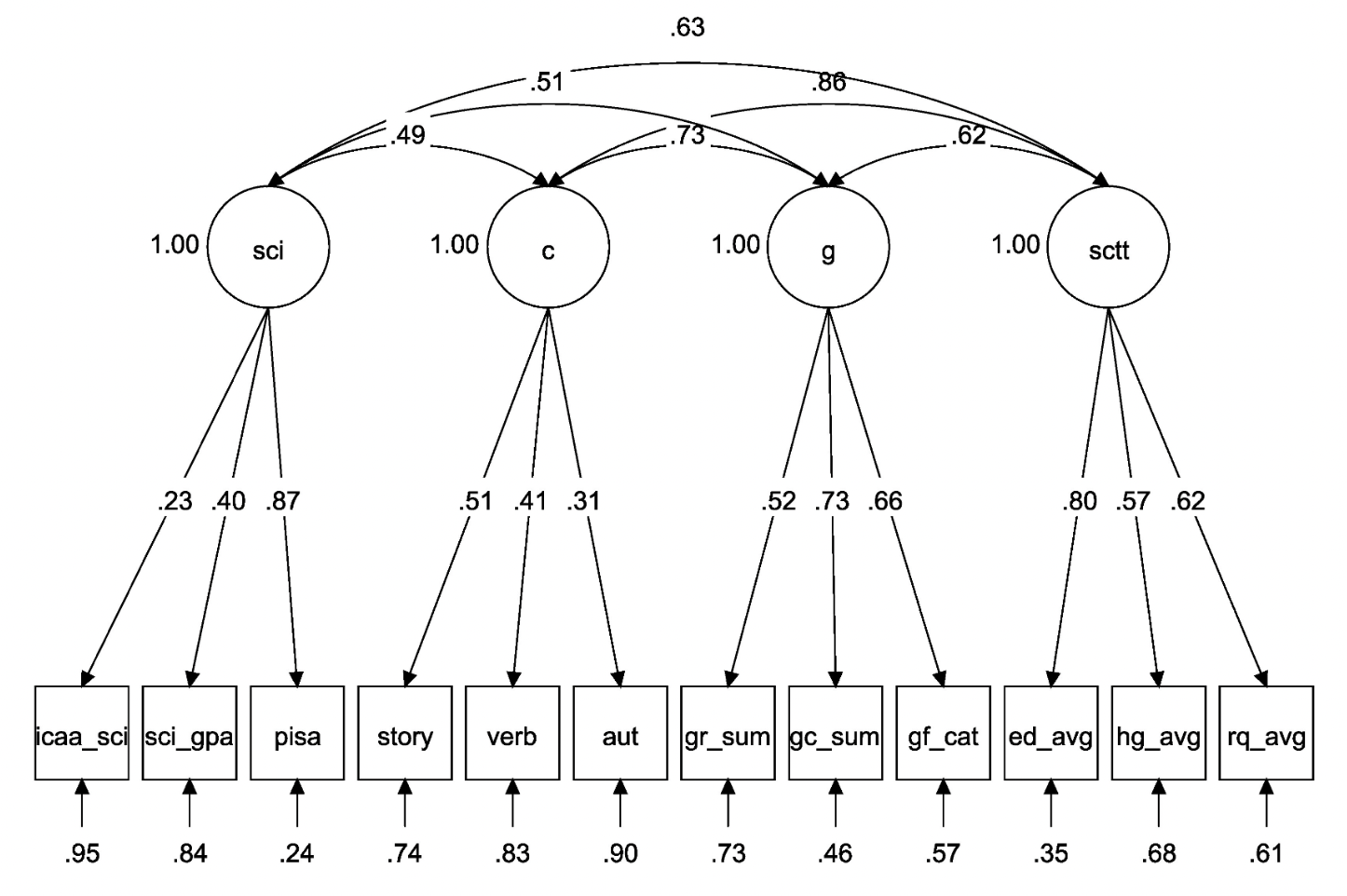

The Scientific Creative Thinking Test (SCTT): Reliability, Validity, and Automated Scoring

Beaty, R., Cortes, R. A., Luchini, S., Patterson, J. D., Forthmann, B., Baker, B. S., ... & Green, A. (2024). The scientific creative thinking test (SCTT): Reliability, validity, and automated scoring. PsyArXiv.

Creative thinking is a primary driver of innovation in science, technology, engineering, and math (STEM), allowing students and practitioners to generate novel hypotheses, flexibly connect information from diverse sources, and solve ill-defined problems. To foster creativity in STEM education, there is a crucial need for assessment tools for measuring STEM creativity that educators and researchers can apply to test how different teaching approaches impact scientific creativity in undergraduate education. In this work, we introduce the Scientific Creative Thinking Test (SCTT). The SCTT includes three subtests that assess cognitive skills important for STEM creativity: generating hypotheses, research questions, and experimental designs. In five studies with young adults, we demonstrate the reliability and validity of the SCTT as well as measurement invariance across race/ethnicity and gender. In addition, we present a method for automatically scoring SCTT responses, training the large language model Llama 2 to produce originality scores that closely align with human ratings.

Read more

May 2024

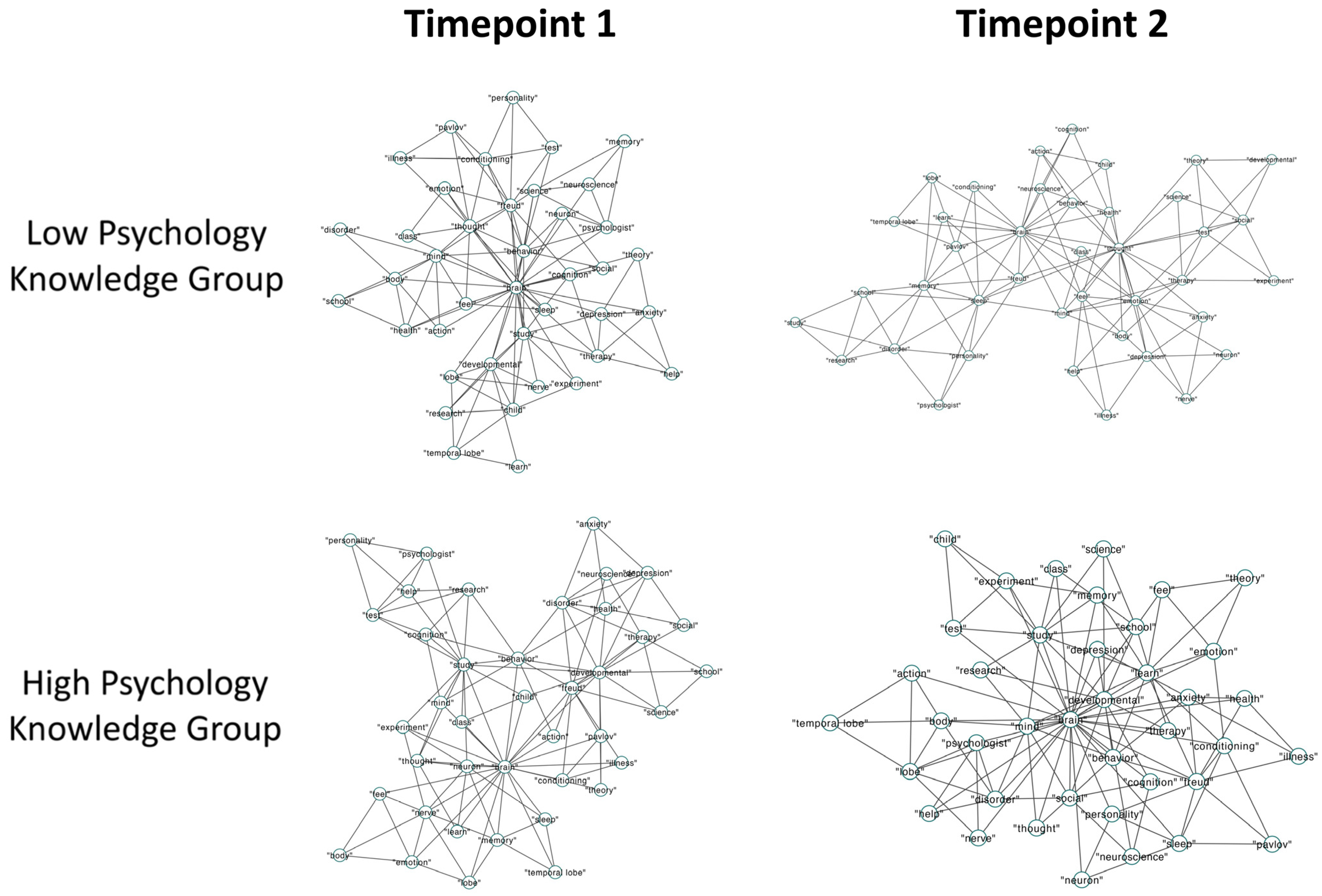

Mapping the Memory Structure of High-Knowledge Students: A Longitudinal Semantic Network Analysis

Luchini, S. A., Wang, S., Kenett, Y. N., & Beaty, R. E. (2024). Mapping the memory structure of high-knowledge students: A longitudinal semantic network analysis. Journal of Intelligence, 12(6), 56.

Code and data available here.

In this paper, we explored the relationship between semantic networks and learning across two studies of undergraduate students enrolled in an introductory psychology course. We administered a cumulative multiple-choice test of psychology knowledge and estimated semantic networks across two domains: domain-specific (psychology) and domain-general (animals). Based on performance on the psychology test, we categorized students into a high-knowledge or low-knowledge group and compared their semantic networks. We found that the high-knowledge group had semantic networks that were more clustered, with shorter distances between concepts, compared to the low-knowledge group. We also found that the semantic networks of high-knowledge students became more interconnected over time. Successful learners show a distinct semantic network, characterized by high connectivity and short path distances between concepts, across both the domain-general and domain-specific networks, highlighting the utility of cognitive network science for studying variation in student learning.
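The two network properties mentioned above, clustering and distance between concepts, can be sketched with standard graph measures: the average clustering coefficient and the average shortest path length. The example below is a minimal illustration on hypothetical toy networks; the node labels and edges are invented, and it is not the estimation pipeline used in the paper.

```python
from collections import deque


def build_graph(edges):
    """Undirected graph stored as a dict mapping node -> set of neighbors."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def average_clustering(graph):
    """Mean local clustering coefficient (nodes with < 2 neighbors count as 0)."""
    total = 0.0
    for neighbors in graph.values():
        k = len(neighbors)
        if k < 2:
            continue
        # Count edges that exist among this node's neighbors.
        links = sum(1 for a in neighbors for b in neighbors
                    if a < b and b in graph[a])
        total += 2.0 * links / (k * (k - 1))
    return total / len(graph)


def average_shortest_path_length(graph):
    """Mean shortest path over all reachable node pairs, via breadth-first search."""
    total, pairs = 0, 0
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for neighbor in graph[node]:
                if neighbor not in dist:
                    dist[neighbor] = dist[node] + 1
                    queue.append(neighbor)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


# Hypothetical animal networks: a sparse chain and a more interconnected one.
sparse = build_graph([("cat", "dog"), ("dog", "horse"), ("horse", "cow")])
dense = build_graph([("cat", "dog"), ("dog", "horse"), ("horse", "cow"),
                     ("cat", "horse"), ("dog", "cow")])

for name, net in (("sparse", sparse), ("dense", dense)):
    print(name, average_clustering(net), average_shortest_path_length(net))
```

Here the denser toy network shows higher clustering and a shorter average path, the same qualitative pattern reported for the high-knowledge group.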

Read more

April 2024

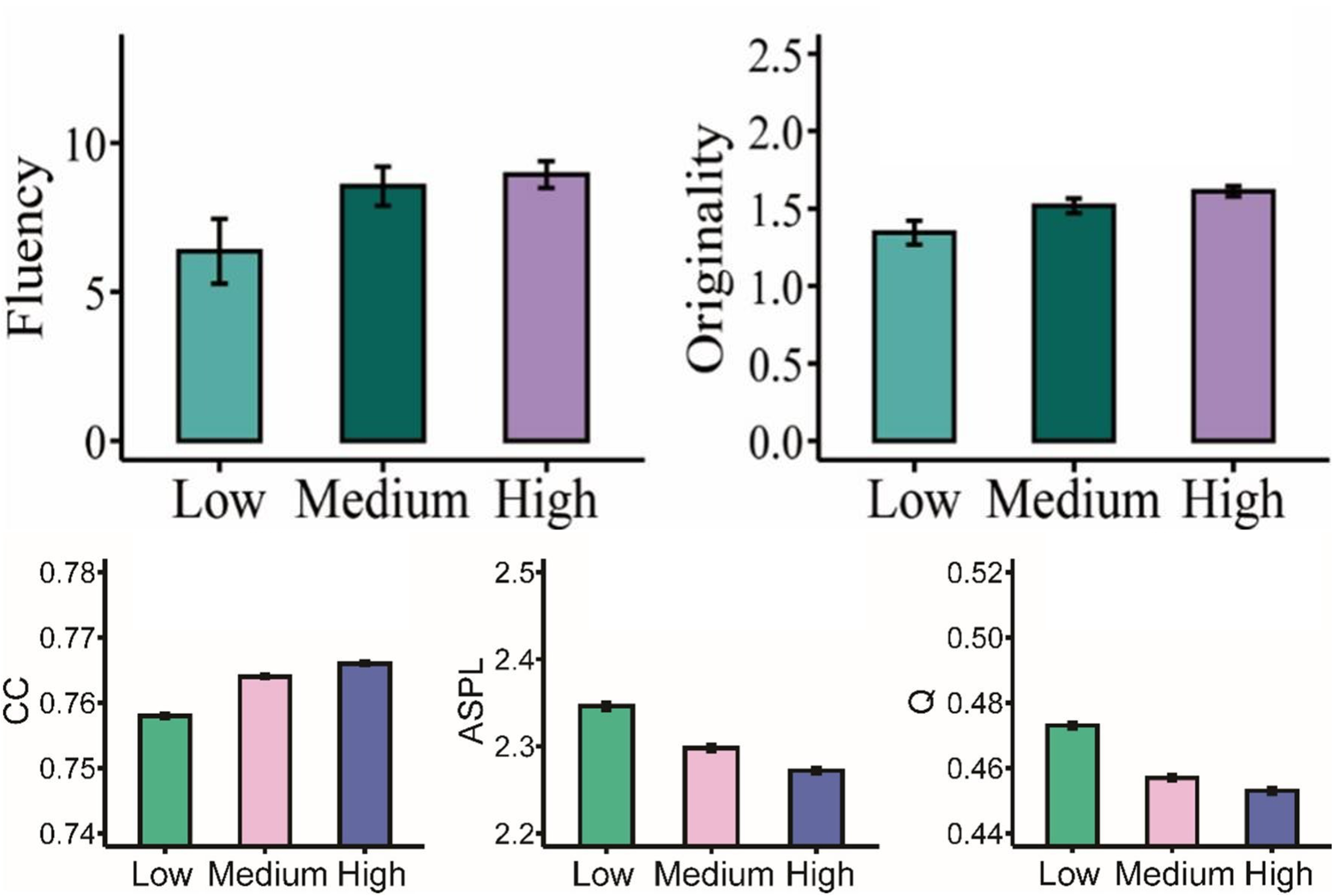

The role of semantic memory networks in crystallized intelligence and creative thinking ability

Li, Y., Beaty, R. E., Luchini, S., Hu, W., & Kenett, Y. N. (2024). The role of semantic memory networks in crystallized intelligence and creative thinking ability. Learning and Individual Differences, 111, 102426.

Crystallized intelligence has been found to support creativity. Yet, the mechanisms that link crystallized intelligence to creativity remain poorly understood. Across two studies, we demonstrate that crystallized intelligence is linked to creative ability as well as semantic memory network structure. We found similar semantic memory network structures that support creativity also underlie high crystallized intelligence. Our findings suggest that flexible access to semantic memory supports both verbal intelligence and creativity.

Read more

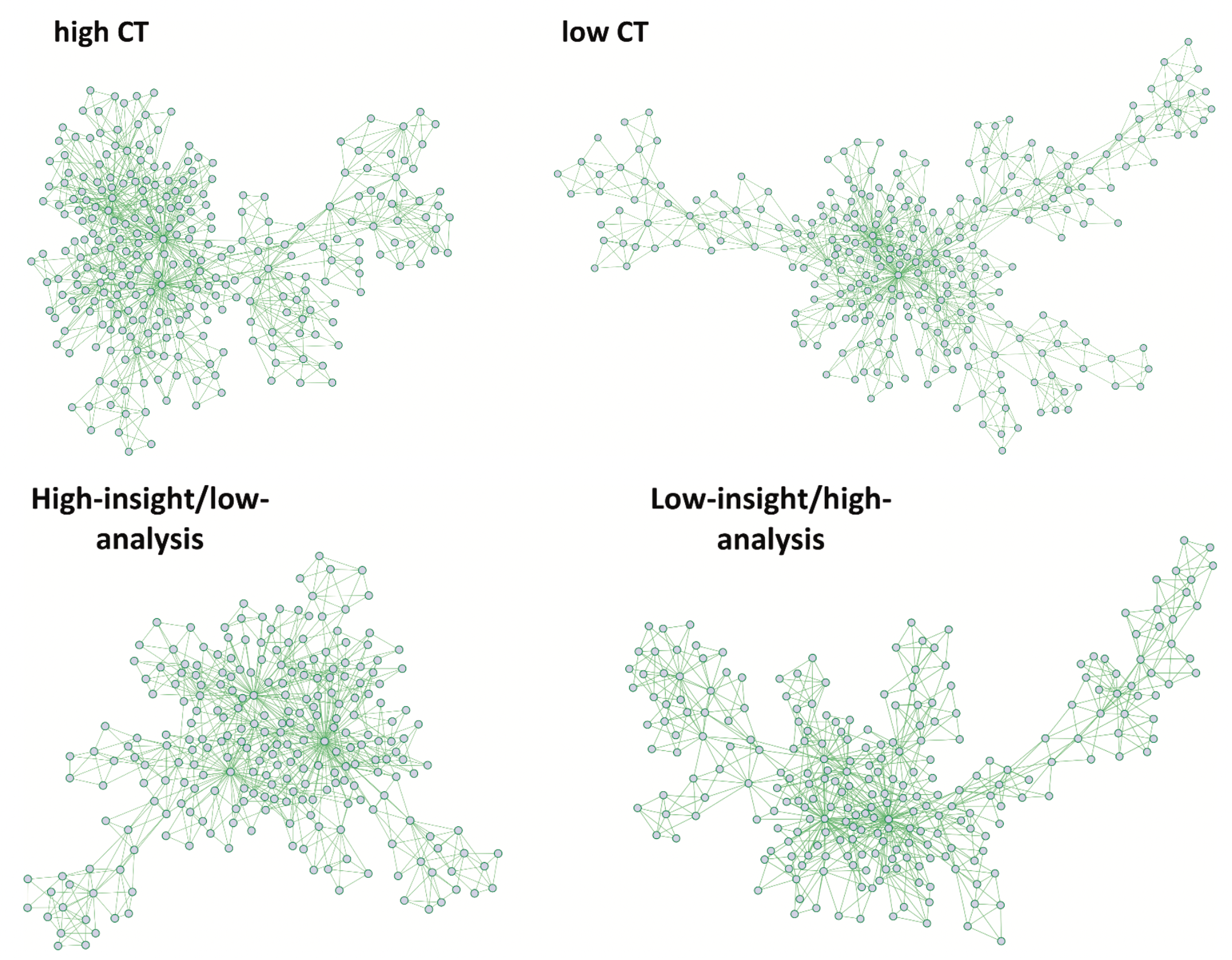

June 2023

Convergent thinking and insight problem solving relate to semantic memory network structure

Luchini, S., Kenett, Y. N., Zeitlen, D. C., Christensen, A. P., Ellis, D. M., Brewer, G. A., & Beaty, R. E. (2023). Convergent thinking and insight problem solving relate to semantic memory network structure. Thinking Skills and Creativity, 48, 101277.

Code and data available here.

The associative theory of creativity holds that creative thinking involves connecting remote concepts in semantic memory, yet research has overlooked its applicability to convergent thinking and insight. Convergent thinking problems can be solved with insight (the sudden "aha" experience) or analysis (deliberately and incrementally working towards the solution). In this work, we used network science to compare semantic network structure across two grouping variables: 1) convergent thinking ability (i.e., problem accuracy), and 2) the tendency to solve problems with insight or analysis. Our findings show that convergent thinking ability is linked to flexible and interconnected semantic networks, with short paths and many connections between concepts. Moreover, participants who primarily solved problems with insight showed shorter average path distances between concepts, even after controlling for accuracy. Our results extend the literature on semantic memory and creativity by linking the organization of semantic memory to convergent thinking and insight.

Read more

April 2023

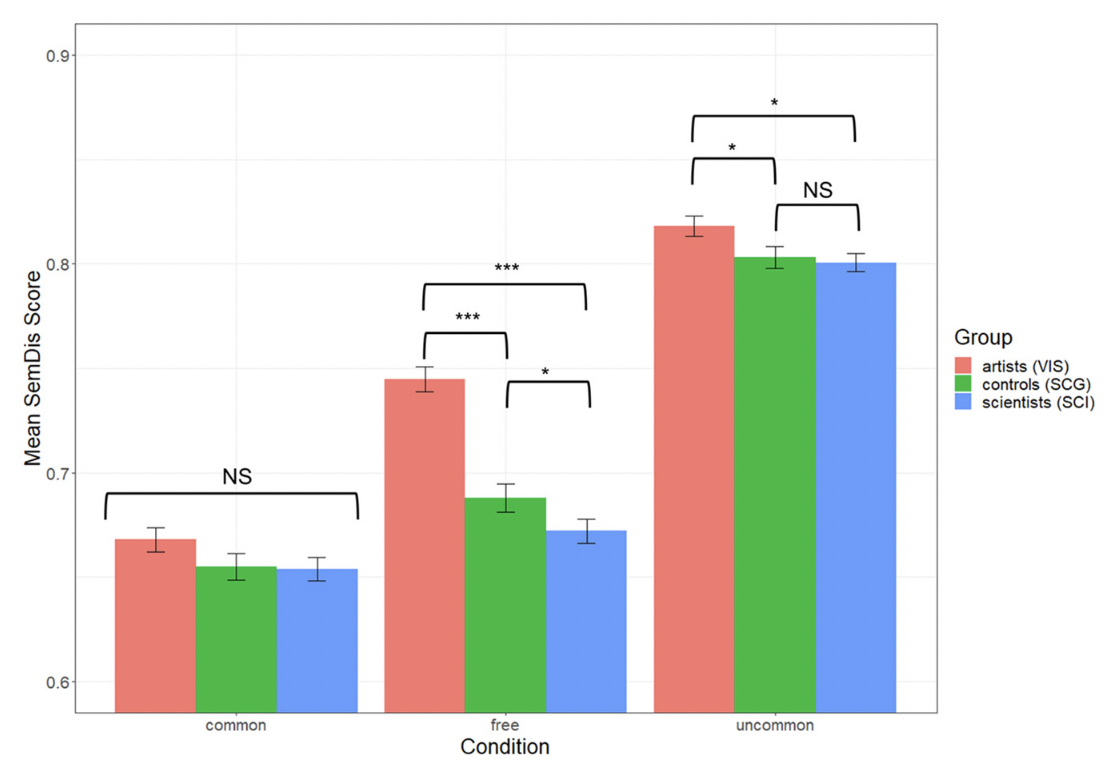

Free Association Ability Distinguishes Highly Creative Artists From Scientists: Findings From the Big-C Project

Merseal, H. M., Luchini, S., Kenett, Y. N., Knudsen, K., Bilder, R. M., & Beaty, R. E. (2025). Free association ability distinguishes highly creative artists from scientists: Findings from the Big-C Project. Psychology of Aesthetics, Creativity, and the Arts, 19(3), 495–504.

People's ability to connect remote associations allows them to form creative ideas. Past creativity research has almost exclusively focused on the general population, with far less work examining eminently-creative individuals across the arts and sciences. We analyzed data from the Big-C Project—a sample of world-renowned visual artists and scientists, and an intelligence-matched comparison group—and tested whether the ability to generate remote word associations differs as a function of creative expertise. We found an interaction between domain expertise and association condition: while artists generated more distant associations overall, this was particularly true for free associations (generating the first word that comes to mind). Visual artists spontaneously produced more remote associations but their creative expertise was less relevant for producing associations involving goal-directed cognitive search.

Read more

Creativity and AI

This line of research investigates the similarities and differences between creative behavior in humans and AI, as well as the outcomes of human-AI collaboration. Studies focus on modern AI systems, such as large language models and large multimodal models. I am particularly interested in exploring how creative idea generation and evaluation in AI compare to those of humans, developing AI with stronger creative abilities, and studying the mechanisms underlying human-AI co-creativity.

December 2025

Creative or Uncreative Partner: Comparing Humans and AI in Collaborative Creative Tasks

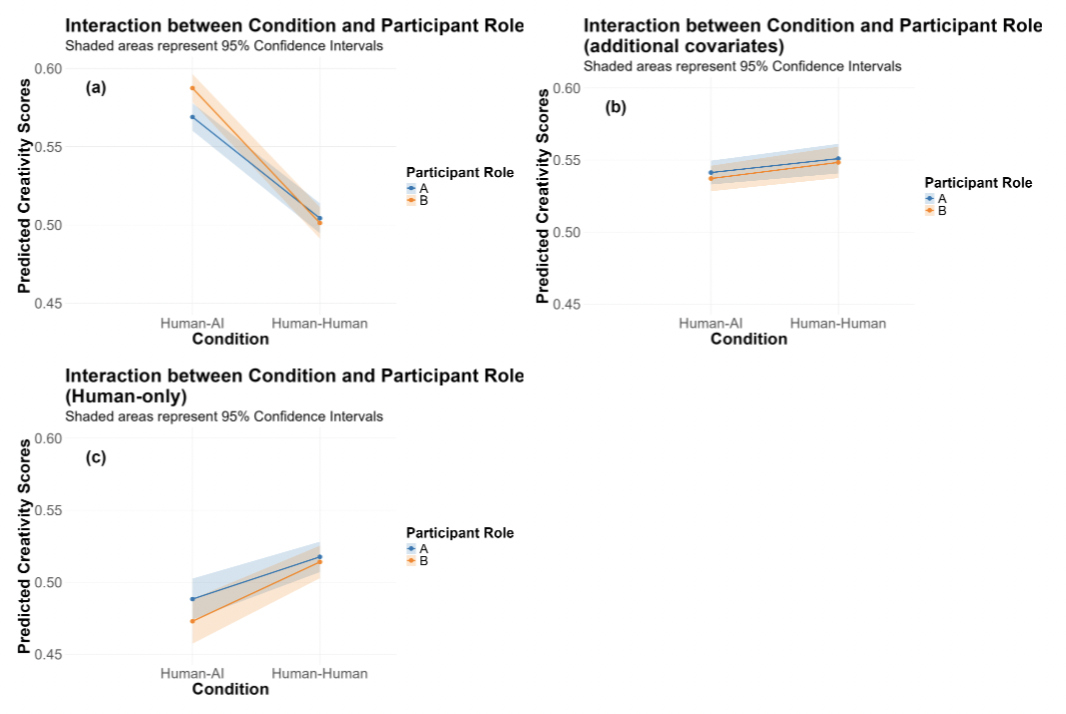

Luchini, S., Lauharatanahirun, N., & Beaty, R. (2025). Creative or Uncreative Partner: Comparing Humans and AI in Collaborative Creative Tasks. OSF.

As generative AI becomes increasingly integrated into creative work, understanding how AI reshapes collaboration has become critical. In this study, we directly compare human-human and human-AI collaboration across two creative tasks: the Alternative Uses Task (AUT) and creative short story writing. Participants were randomly assigned to pairs in either human-human (N = 68 pairs) or human-AI (GPT-4o; N = 72 pairs) conditions. Our findings reveal that the apparent "AI advantage" in creative collaboration is illusory, driven primarily by increased AI verbosity rather than enhanced creativity. Critically, collaboration with AI partners negatively impacted humans' own creative responses compared to human-human partnerships, with human-AI collaboration failing to enhance idea originality or diversity relative to human-human collaboration. Human partners demonstrated superior collaborative effectiveness that strengthened over time, indicating that current generative AI systems, while producing more verbose outputs, do not replicate the collective creativity characteristic of human-human collaboration.

Read more

November 2025

Generative AI Does Not Erase Individual Differences in Human Creativity

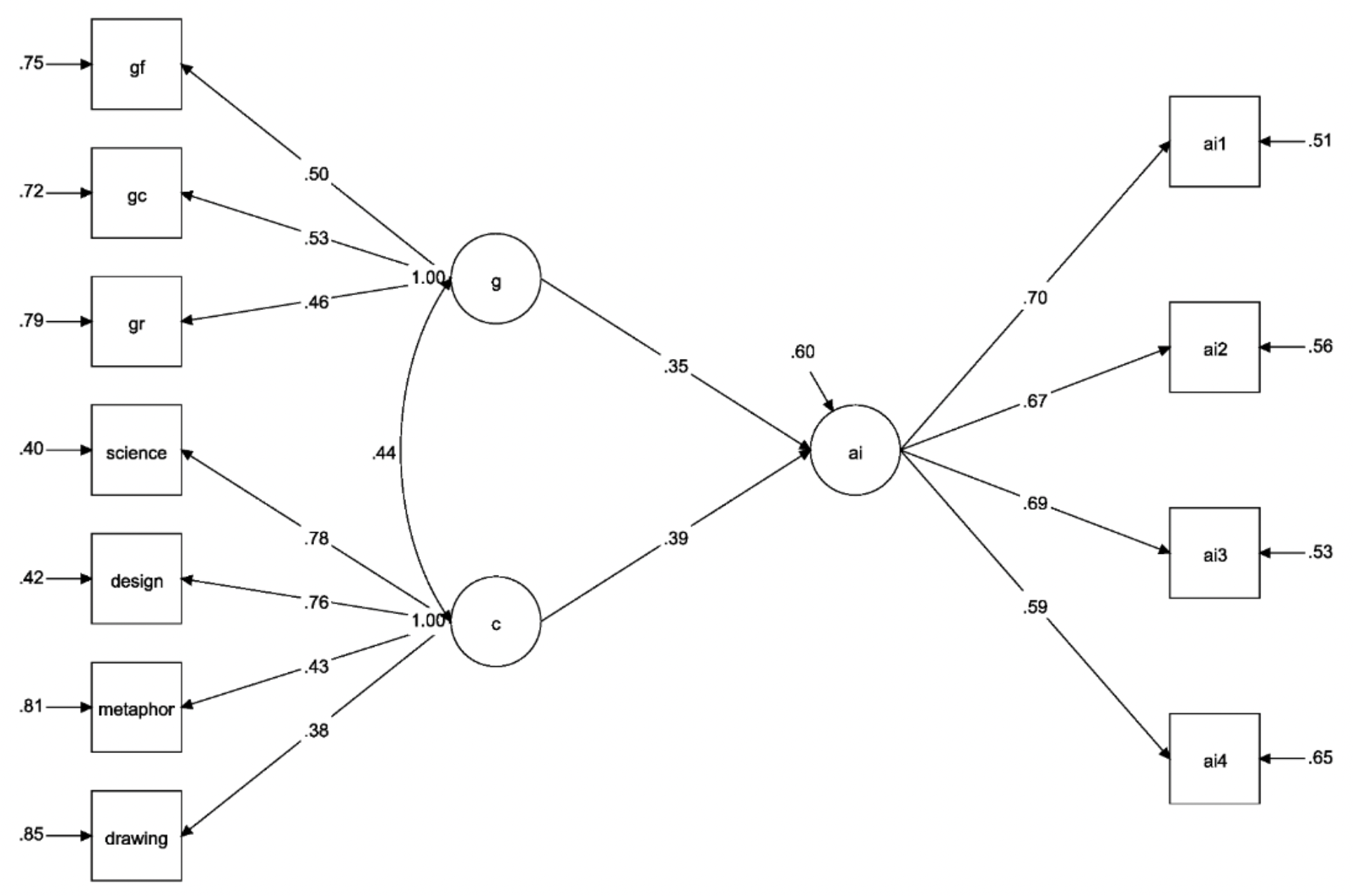

Luchini, S. A., Kaufman, J., & Beaty, R. Generative AI Does Not Erase Individual Differences in Human Creativity. OSF.

Code and data available here.

For over a century, researchers have documented robust individual differences in people's ability to think creatively. Today, however, generative AI appears to democratize creativity, potentially minimizing individual differences by offering powerful tools for anyone to generate ideas, stories, and more. Do individual differences still matter for creativity when everyone has access to the same advanced technology? Across two studies (N = 442), we tested whether two individual factors—creative ability (assessed via idea generation and story-writing tasks) and general intelligence (assessed via reasoning and knowledge tests)—predict performance in creative collaboration with large language models (LLMs). Study 1 found that baseline creative writing ability strongly predicted AI-assisted story writing with GPT-4o. Study 2 replicated and extended this finding, showing that people who were more creative and intelligent performed better on entirely different creative tasks done with AI assistance. Thus, people with more creative task expertise (Study 1) and those with higher baseline cognitive abilities (Study 2) produced more original ideas with AI assistance.

Read more

November 2025

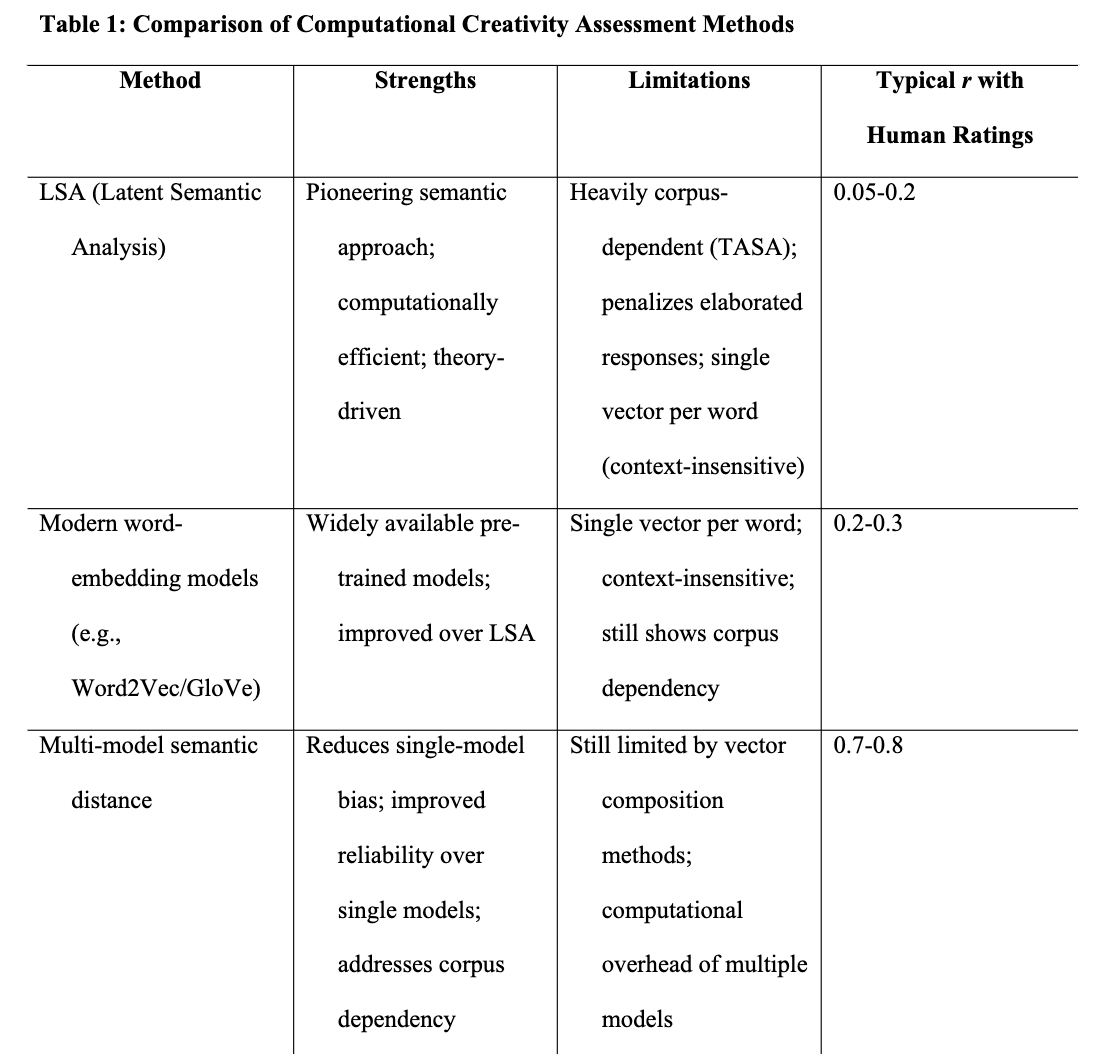

Automated Creativity Assessment: A Review of Methods, Challenges, and Future Prospects

Bahg, G., Luchini, S. A., & Beaty, R. Automated Creativity Assessment: A Review of Methods, Challenges, and Future Prospects. OSF.

In this review, we provide an overview of recent developments in computational creativity assessment methods, including techniques based on artificial intelligence (such as large language models) and semantic distance. Creativity assessment has historically relied on human raters to judge the quality of ideas and products. However, this approach is exceptionally costly due to the limited number of experts, the time and resources required for training them, and the high task burden. To overcome these barriers and expedite creativity research, computational automation of creativity assessment has been explored. We discuss the current challenges and potential future directions, such as improving the interpretability and explainability of automated scoring, and a complementary approach centered around evaluating mental processes leading to creativity.

Read more

August 2025

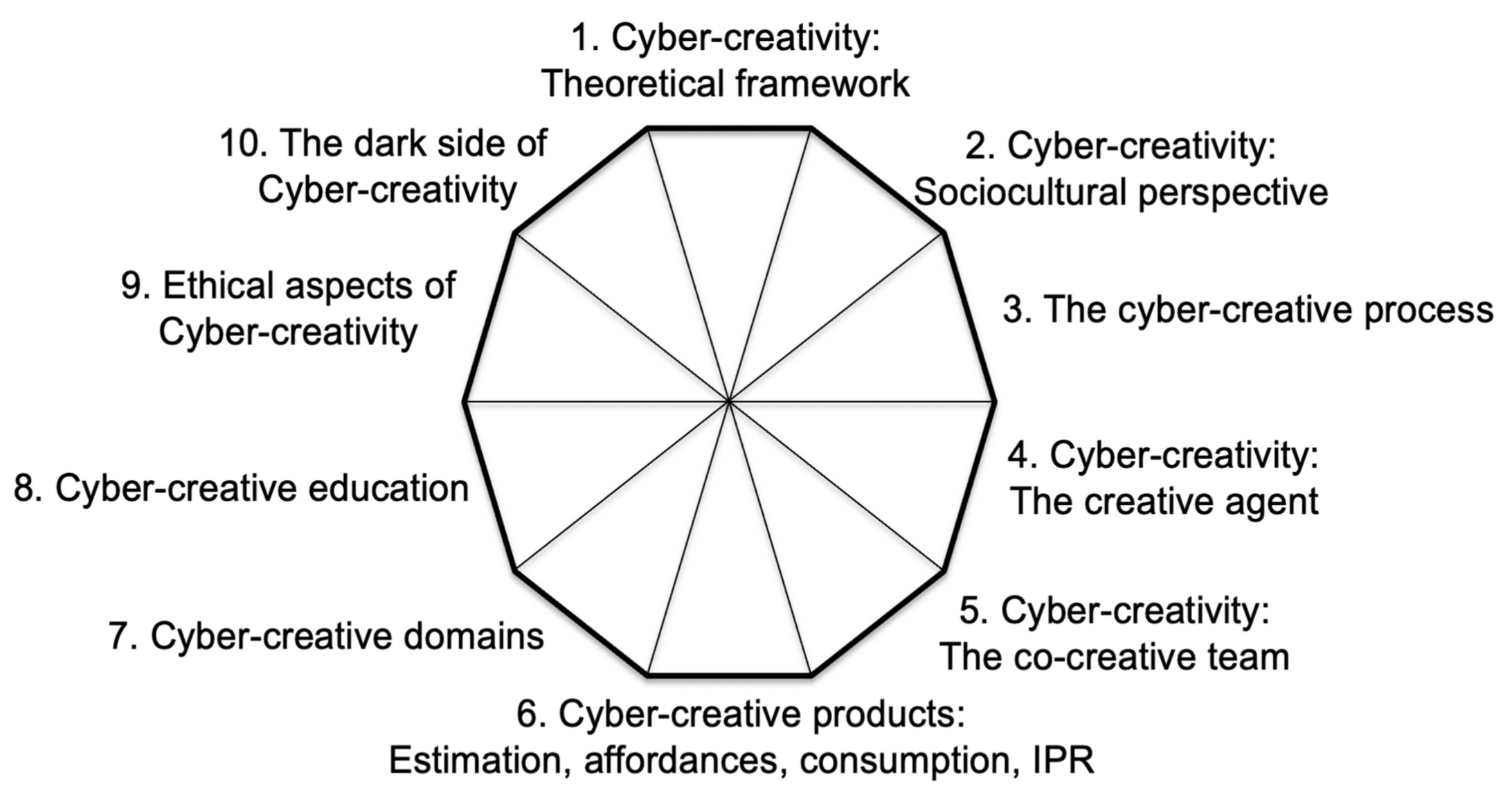

Cyber-Creativity: A Decalogue of Research Challenges

Corazza, G. E., Agnoli, S., Jorge Artigau, A., Beghetto, R. A., Bonnardel, N., Coletto, I., ... & Lubart, T. (2025). Cyber-creativity: A decalogue of research challenges. Journal of Intelligence, 13(8), 103.

In this article, we identify all forms of human–AI collaboration as cyber-creativity. We introduce the following two forward-looking scenarios: a utopian vision for cyber-creativity, in which AI serves to enhance and not replace human creativity, and a dystopian view associated with the pre-emption of all human creative agency caused by the rise of AI. In our view, the scientific community is called to bring its contribution, however small, to help humanity make steps towards the utopian scenario, while avoiding the dystopian one. Here, we present a decalogue of research challenges identified for this purpose, touching upon the following dimensions: (1) the theoretical framework for cyber-creativity; (2) sociocultural perspectives; (3) the cyber-creative process; (4) the creative agent; (5) the co-creative team; (6) cyber-creative products; (7) cyber-creative domains; (8) cyber-creative education; (9) ethical aspects; and (10) the dark side of cyber-creativity. For each dimension, a brief review of the state-of-the-art is provided, followed by the identification of a main research challenge, then specified into a list of research questions.

Read more

October 2025

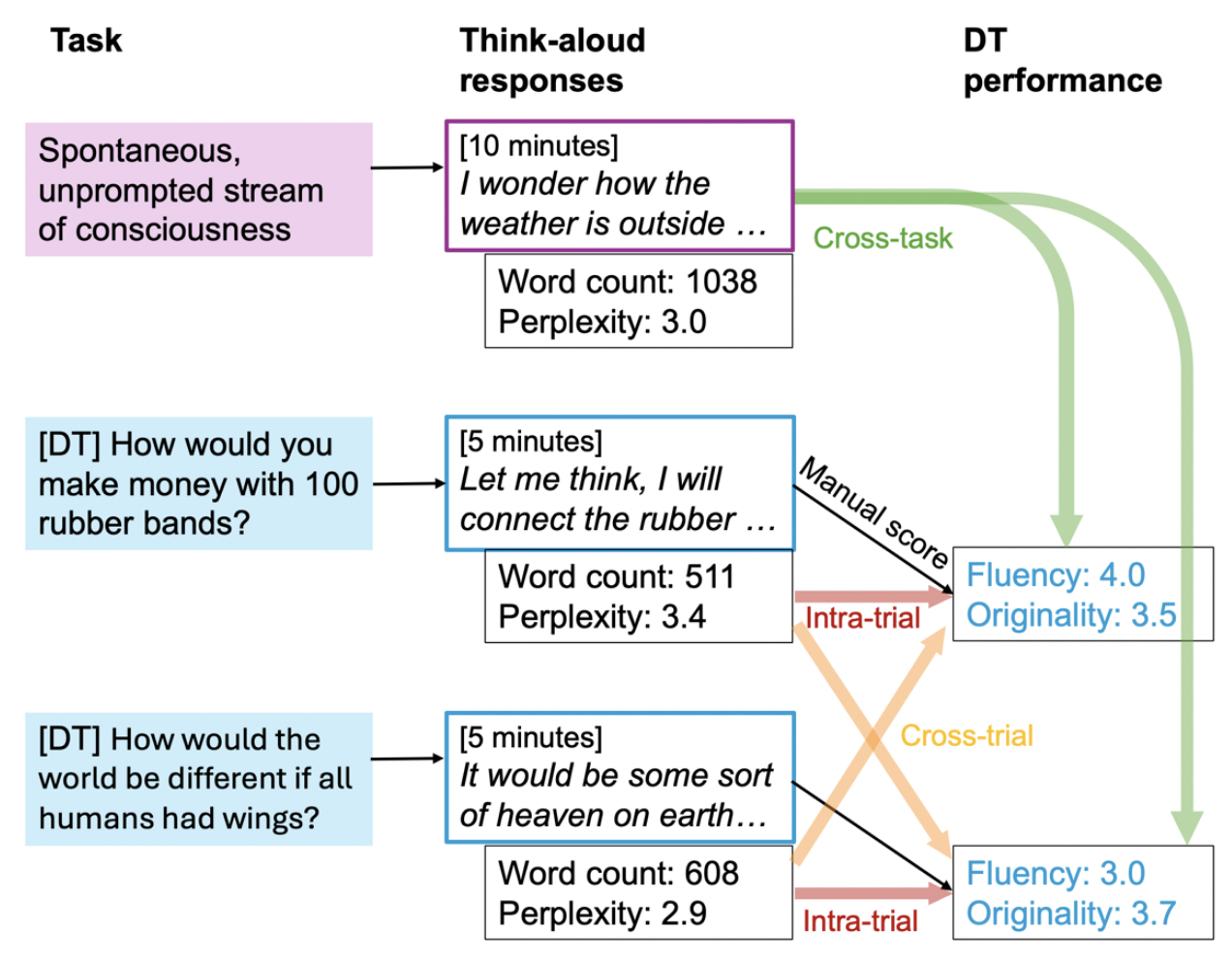

A pleasant surprise: perplexity from large language models assesses divergent thinking

Yu, Y., Raffaelli, Q., Luchini, S., Beaty, R. E., Andrews-Hanna, J. R., & Yu, Y. A pleasant surprise: perplexity from large language models assesses divergent thinking. OSF.

Divergent thinking (DT) is central to human innovation and creativity, yet its assessment remains largely constrained by lab-based tasks and a focus on final products, overlooking the rich information embedded in the thought process itself. We introduce perplexity—a computational measure of surprise derived from large language models—as a theoretically grounded DT measure that can be flexibly applied to verbal data in both process- and product-oriented contexts. Study 1 links the perplexity in participants' verbalized thought processes—during both goal-directed divergent thinking and unprompted stream of consciousness—with individuals' DT performance. We show that a higher level of surprising content (higher perplexity) predicts greater DT capacity. Study 2 focuses on creative products, validating perplexity as an automated measure of originality in solutions to open-ended, real-world problems. Together, these findings establish perplexity as a unified metric that bridges theories of creative cognition with naturalistic, verbal data. By providing a scalable measurement of DT via both participants' thought processes and creative products, our approach opens new avenues for scalable research on DT across diverse contexts.
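For readers unfamiliar with the measure, perplexity is the exponential of the average negative log-probability that a language model assigns to each token in a text. The sketch below shows only that arithmetic; the per-token probabilities are hypothetical stand-ins for values a real LLM would supply, and the function name is illustrative rather than taken from the paper's code.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(mean negative log-probability of the tokens)."""
    if not token_probs:
        raise ValueError("token_probs must contain at least one probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical per-token probabilities (a real model would provide these).
predictable_response = [0.5, 0.5, 0.5, 0.5]
surprising_response = [0.25, 0.25, 0.25, 0.25]

print(perplexity(predictable_response))  # about 2.0
print(perplexity(surprising_response))   # about 4.0: less expected text
```

Lower token probabilities mean the model found the text less expected, so perplexity rises, which is why it can serve as an index of surprising content.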

Read more

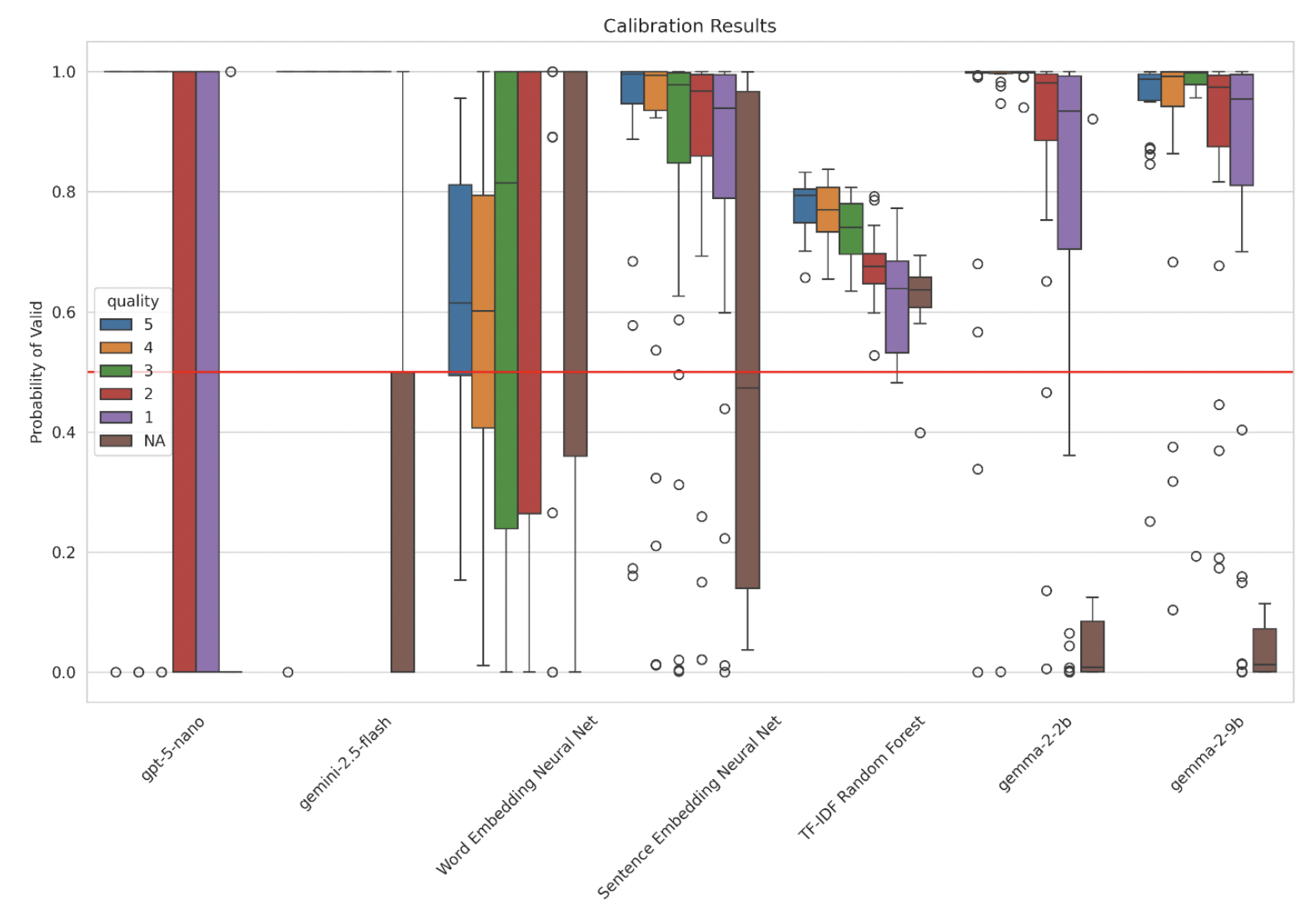

October 2025

Automated Detection of Invalid Responses to Creativity Assessments

Laverghetta, A., Luchini, S. A., Pronchick, J., & Beaty, R. (2025). Automated Detection of Invalid Responses to Creativity Assessments. OSF.

Participants in creativity studies sometimes produce invalid data that is unusable for analysis, such as nonsensical or incomplete responses to idea generation tasks. Identifying such responses is a time-consuming yet necessary process to ensure robust results but also remains challenging to automate. We explore the efficacy of transformer language models (TLMs) for automatically detecting invalid creativity responses. We train a suite of transformers to detect invalid data for two creativity assessments: the Alternate Uses Task (AUT) and a design problems task (DPT). We find that transformers generally outperform other baselines for both tasks. Further, we show that TLMs' predictions are well calibrated to the quality of the participant response, ensuring that model failures will occur in a predictable way. Finally, we conduct a fairness analysis based on language background—using an adversarial study where participants attempt to "break" the model by coming up with invalid responses that are nonetheless labeled valid. Our results demonstrate the potential of deep learning methods for cleaning creativity assessment data in a reliable and unbiased way.

Read more

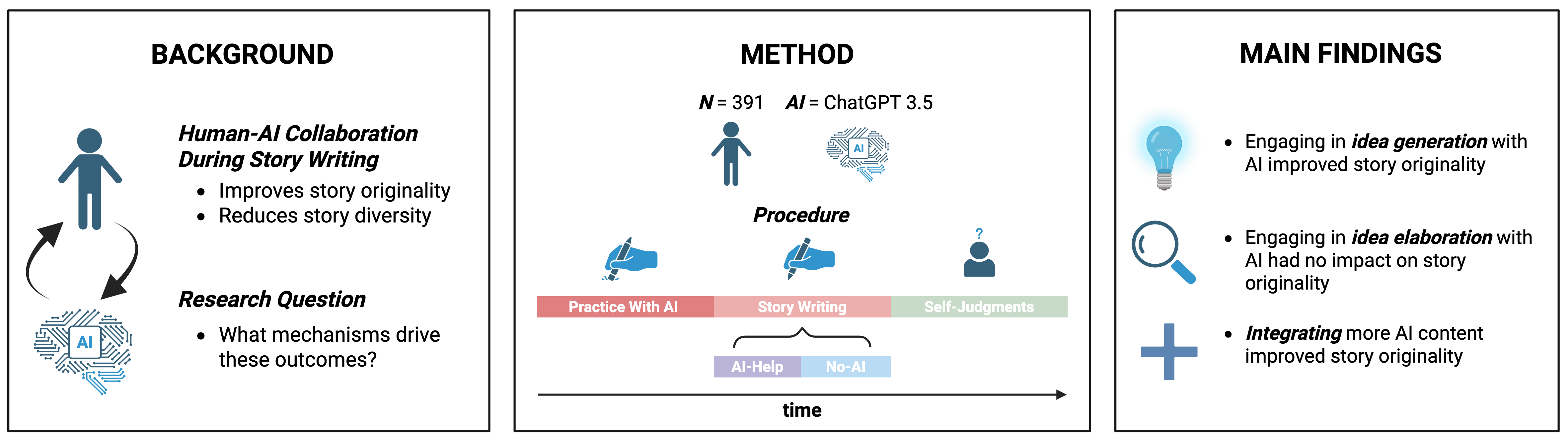

May 2025

The Roles of Idea Generation and Elaboration in Human-AI Collaborative Creativity

Luchini, S. A., Pronchick, J., Ceh, S. M., Kaufman, J. C., Johnson, D. R., Rafner, J., & Beaty, R. (2025). The Roles of Idea Generation and Elaboration in Human-AI Collaborative Creativity. PsyArXiv.

Data available here.

In this work, we explore how humans and AI can collaborate effectively in creative tasks. We investigate the distinct roles of idea generation and elaboration in human-AI collaborative creativity, examining how these processes interact and contribute to creative outcomes. Our findings reveal that while AI excels at generating diverse ideas, human creativity shines in the elaboration and refinement of these ideas. We demonstrate that the most successful creative outcomes emerge when humans and AI work together, leveraging their complementary strengths. This research provides insights into optimizing human-AI collaboration for creative tasks and suggests new directions for developing AI systems that better support human creativity.

Read more

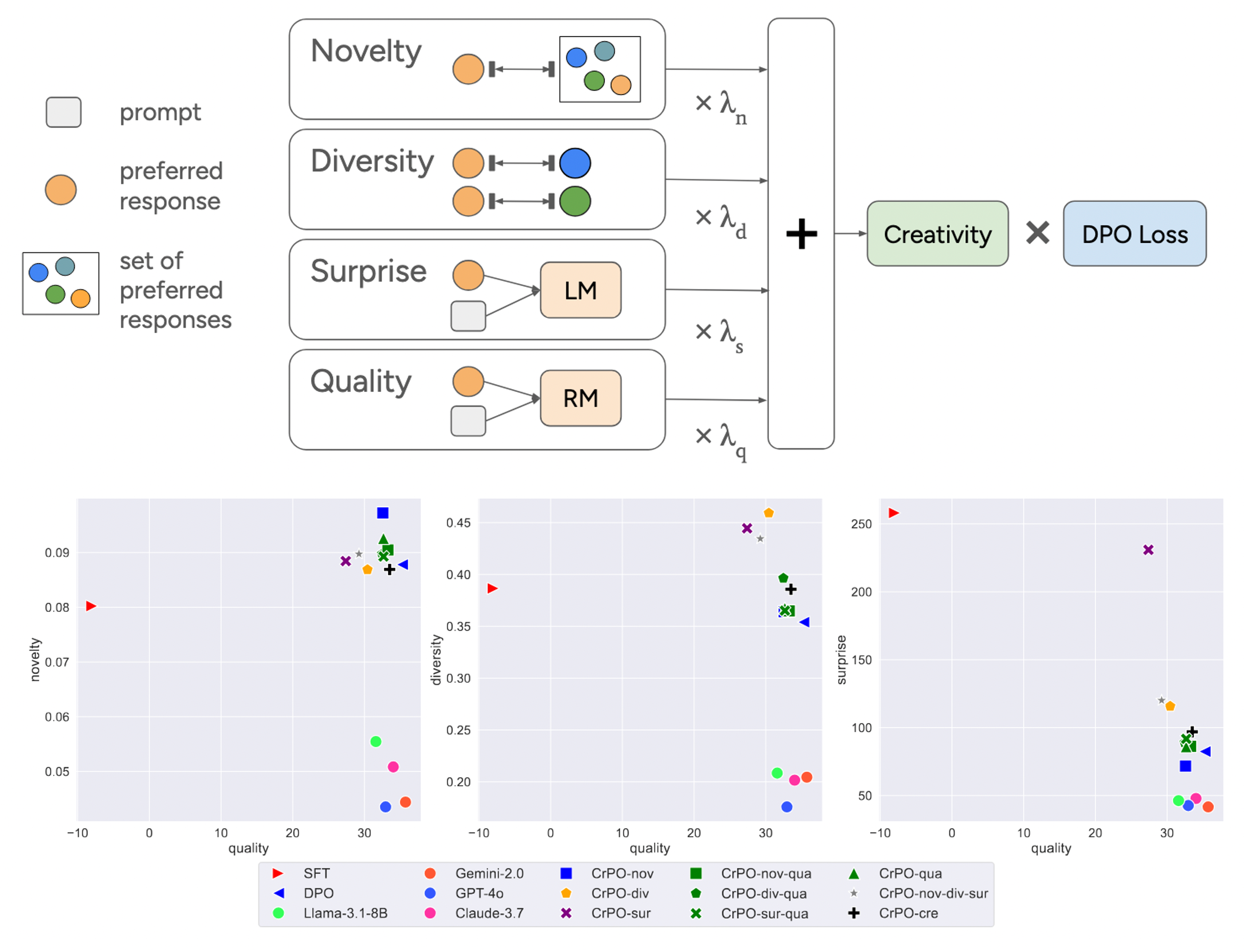

May 2025

Creative Preference Optimization

Ismayilzada, M., Laverghetta Jr, A., Luchini, S. A., Patel, R., Bosselut, A., van der Plas, L., & Beaty, R. (2025). Creative Preference Optimization. Findings of the Association for Computational Linguistics: EMNLP. 9580-9609.

Code and data available here.

While LLMs have shown impressive performance on many natural language tasks, they still struggle with creativity. Past attempts at enhancing LLM creativity have narrowly focused on improving the diversity of their outputs on very specific tasks. In our work, we introduce Creative Preference Optimization, an alignment method that injects signals from multiple creativity dimensions (novelty, quality, diversity, and surprise) to guide the LLM's output. We compiled a large dataset of 200k+ human responses to creativity tasks, rated on their creativity by trained human judges. We used this dataset to train a model, which was found to outperform several baselines, including GPT-4o, on creativity tests. Our findings show that creative preference optimization is a promising direction for boosting LLM creativity without compromising output quality.

Read more

June 2025

Automating Creativity Assessment in Engineering Design: A Psychometric Validation of AI-Generated Design Problems

Luchini, S., Beaty, R., Boyce, A., Zappe, S., & Forthmann, B. (2025). Automating creativity assessment in engineering design: A psychometric validation of AI-generated design problems. OSF.

Creativity is essential for engineering design, yet its assessment remains challenging due to the resource-intensive nature of traditional evaluation methods. This study investigates the potential of automatic item generation (AIG) using large language models (LLMs) to create psychometrically sound assessment items for measuring creative thinking in engineering. We developed and validated engineering design problems across three domains: ability difference and limitations (e.g., assisting people with learning impairments), transportation and mobility (e.g., reducing traffic congestion in mega cities), and social environments and systems (e.g., improving access to clean water in remote areas). Results demonstrated that LLM-generated items achieved comparable or higher content validity rates compared to expert-written items (43% vs. 20% success rate). Bayesian confirmatory factor analysis supported a unidimensional model for fluency, originality, and effectiveness scores, with excellent reliability estimates (ranging from .92 to .95). The present research advances our understanding of automated assessment generation in engineering education, provides empirical evidence for the psychometric properties of AI-generated creativity tasks, and offers a scalable approach for measuring creative thinking in engineering classrooms.

Read more

March 2025

Automated scoring of creativity in multilingual narratives

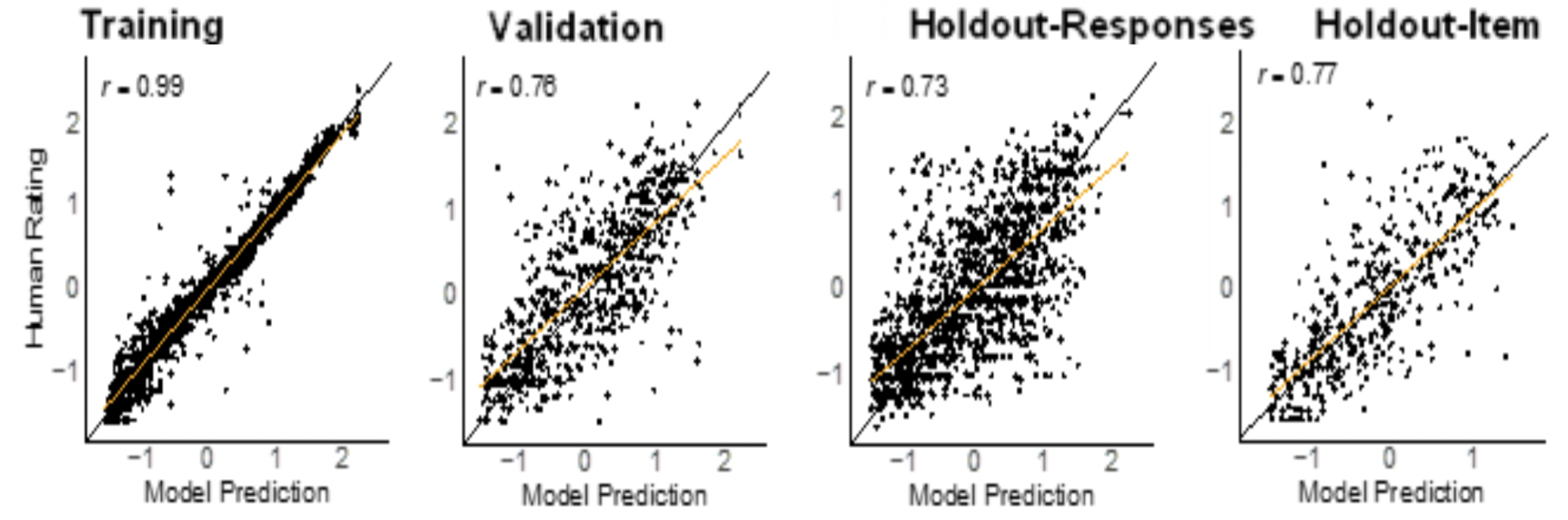

Luchini, S. A., Moosa, I. M., Patterson, J. D., Johnson, D., Baas, M., Barbot, B., ... & Beaty, R. E. (2025). Automated assessment of creativity in multilingual narratives. Psychology of Aesthetics, Creativity, and the Arts.

Code and data available here.

LLMs have shown remarkable success on creativity tasks, yet they have not been applied to scoring multilingual creativity data or narratives. In this work, our goal was to develop an LLM that could score the originality of narratives across 11 different languages. We fine-tuned RoBERTa-base on multilingual stories translated into English, and found it strongly predicted human originality ratings (r ≥ .73). We also fine-tuned XLM-RoBERTa on the same stories, in their original language, and found that it also reliably predicted human originality scores (r ≥ .72). We thus demonstrated that LLMs can successfully score narrative creativity in 11 different languages, surpassing the performance of the best previous automated scoring techniques (e.g., semantic distance). This work represents the first effective, accessible, and reliable solution for the automated scoring of creativity in multilingual narratives.

Read more


March 2025

Automated scoring of creative problem solving with large language models: A comparison of originality and quality ratings

Luchini, S. A., Maliakkal, N. T., DiStefano, P. V., Laverghetta Jr, A., Patterson, J. D., Beaty, R. E., & Reiter-Palmon, R. (2025). Automated scoring of creative problem solving with large language models: A comparison of originality and quality ratings. Psychology of Aesthetics, Creativity, and the Arts.

Code and data available here.


Creative problem solving is a naturalistic form of creative thinking involving the generation of solutions that are not only original but also of high quality (i.e., plausible and effective). We examine whether both originality and quality can be automatically scored by two open-source LLMs for a naturalistic creativity task. We gathered data from 10 studies, amounting to 3,243 participants who completed different items of the creative problem-solving task (CPST). We fine-tuned two LLMs, RoBERTa and GPT-2, and few-shot prompted two separate LLMs, Claude and Llama, to predict human ratings of originality and quality on the CPST. We found that the RoBERTa and GPT-2 models predicted human ratings of solution quality (RoBERTa, r = .83; GPT-2, r = .83) better than solution originality (RoBERTa, r = .79; GPT-2, r = .80). Few-shot prompting was less effective than fine-tuning at predicting both originality (r = .66–.11) and quality (r = .62–.26). We show for the first time that naturalistic creativity tasks can be automatically scored for both originality and quality.
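The r values reported above are correlations between model predictions and human ratings. A minimal sketch of that kind of evaluation, assuming a plain Pearson correlation and using made-up ratings (the lists `human` and `model` are hypothetical, not data from the study):

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical human ratings and model predictions for five responses.
human = [1.0, 2.5, 3.0, 4.5, 2.0]
model = [1.2, 2.0, 3.4, 4.1, 2.3]

print(round(pearson_r(human, model), 2))
```

Values closer to 1 indicate that the model ranks and spaces responses much as human raters do.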

Read more


August 2024

The creative psychometric item generator: a framework for item generation and validation using large language models

Laverghetta Jr, A., Luchini, S., Linell, A., Reiter-Palmon, R., & Beaty, R. (2024). The creative psychometric item generator: a framework for item generation and validation using large language models. arXiv.

Increasingly, large language models (LLMs) are being used to automate workplace processes requiring a high degree of creativity. While much prior work has examined the creativity of LLMs, there has been little research on whether they can generate valid creativity assessments for humans despite the increasingly central role of creativity in modern economies. We develop a psychometrically inspired framework for creating test items (questions) for a classic free-response creativity test: the creative problem-solving (CPS) task. Our framework, the creative psychometric item generator (CPIG), uses a mixture of LLM-based item generators and evaluators to iteratively develop new prompts for writing CPS items, such that items from later iterations will elicit more creative responses from test takers. We find strong empirical evidence that CPIG generates valid and reliable items and that this effect is not attributable to known biases in the evaluation process. Our findings have implications for employing LLMs to automatically generate valid and reliable creativity tests for humans and AI.

Read more


February 2024

Bridging the measurement gap: A large language model method of assessing open-ended question complexity

Raz, T., Luchini, S., Beaty, R., & Kenett, Y. (2024). Bridging the measurement gap: A large language model method of assessing open-ended question complexity. In Proceedings of the Annual Meeting of the Cognitive Science Society (Vol. 46).

Model available here.


Question-asking, an essential yet often understudied activity, holds significant implications for fields such as learning, creativity, and cognitive development. Question complexity has been found to be a crucial factor affecting question quality. However, assessing the complexity of questions, especially open-ended questions, remains a methodological challenge. In this study, we develop a computational model that automatically scores the complexity of open-ended questions. Our results reveal that our LLM-generated Bloom scores correlated strongly with human ratings of complexity (r = .73), while also greatly outperforming the baseline methods tested. The research emphasizes the value of LLMs in automating the assessment of open-ended question complexity, fostering cost-effective, automatic, and reliable measurements in this domain.

Read more
